Machine Learning Methods to Understand How Predictors of Achievement Change over Time: Methodological Challenges, Solutions, and Future Directions

Wed-02

Presented by: Rosa Lavelle-Hill

The rate of scientific publication is growing exponentially. This creates an information overload problem not only for researchers trying to digest it, but also for policy makers and practitioners seeking to distribute resources effectively or design interventions. Meta-analyses and systematic reviews are useful methods for summarizing this literature. Yet they typically collate findings from piecemeal studies that each examine the effect of a handful of features on a specific outcome. In Educational Psychology, researchers invest in collecting large-scale longitudinal survey data, but rarely try to model all available information at once to make full use of the dataset and pit features against each other. Machine learning methodologies can model hundreds of features and their (non-linear) interactions simultaneously while guarding against overfitting. This allows the research community to better understand which predictors of an outcome are most important.

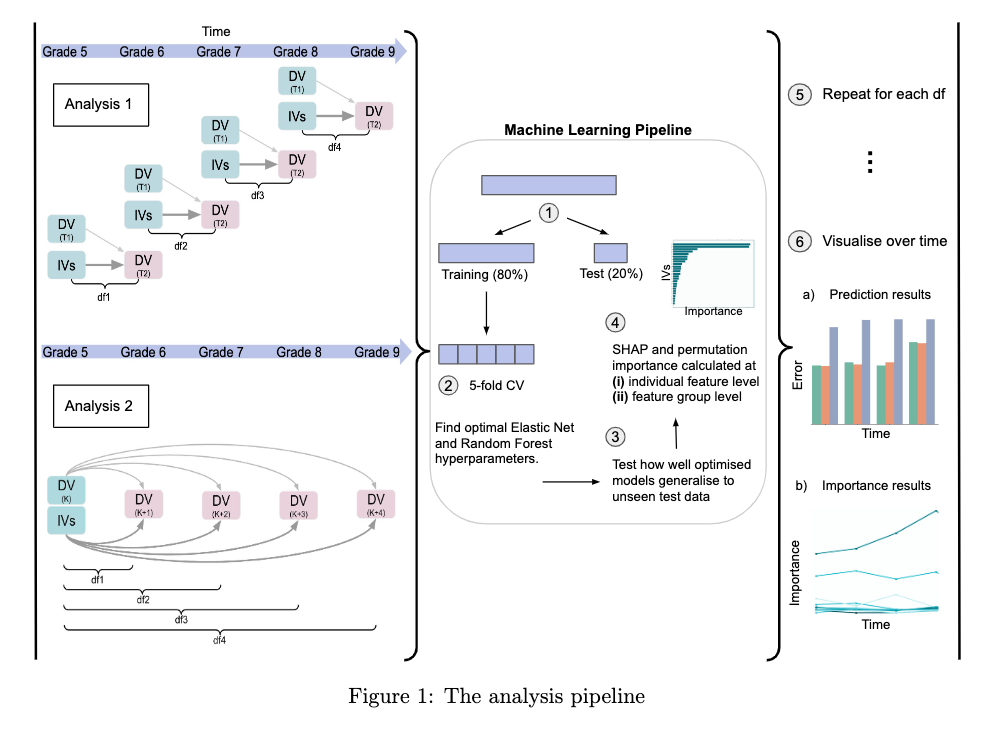

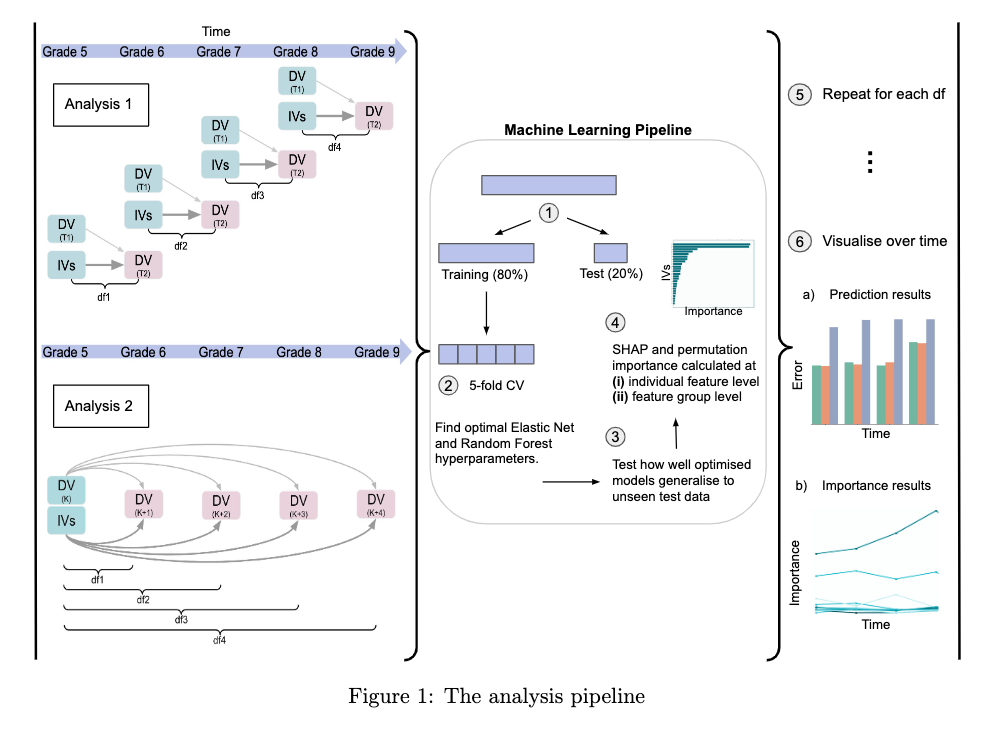

In this work, we employ a machine learning approach to predict math achievement for German secondary school students (N=3,425) and comparatively assess the importance of 104 different features (or groups of features) (see Figure 1). An elastic net regression predicted math achievement better than a mean baseline model up to 4 years into the future, with prediction R² ranging from 0.49 to 0.78. The information collected via surveys (motivation, emotion, expectations, self-concept, demographics, SES, cognitive strategies, and reports on classroom and home environments), school grades, and cognitive tests (IQ) together explained an additional 0.03 to 0.12 of the variance beyond students' prior ability scores. When predicting the subsequent year's achievement, prior ability becomes even more predictive as students progress through school.
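The evaluation logic above can be sketched in plain Python. The sketch fits an elastic net by coordinate descent and scores it on held-out students with out-of-sample R², measured against a baseline that always predicts the training-set mean, so a positive value means the model beats that baseline. It then compares a prior-ability-only model with one that adds further features, giving an incremental R². This is a minimal sketch under stated assumptions, not the authors' pipeline. The simulated data, the fixed penalty values (`alpha=0.1`, `l1_ratio=0.5`, chosen here without tuning), and all function names are hypothetical. The penalty is parametrized as in scikit-learn's `ElasticNet`.

```python
import random


def soft_threshold(z, gamma):
    """Lasso shrinkage operator: move z toward zero by gamma, clipping at zero."""
    if z > gamma:
        return z - gamma
    if z < -gamma:
        return z + gamma
    return 0.0


def standardize(X_train, X_test):
    """Center and scale columns using training-set statistics only."""
    n, p = len(X_train), len(X_train[0])
    means = [sum(row[j] for row in X_train) / n for j in range(p)]
    sds = [(sum((row[j] - means[j]) ** 2 for row in X_train) / n) ** 0.5 or 1.0
           for j in range(p)]

    def scale(X):
        return [[(row[j] - means[j]) / sds[j] for j in range(p)] for row in X]

    return scale(X_train), scale(X_test)


def fit_elastic_net(X, y, alpha=0.1, l1_ratio=0.5, n_iter=200):
    """Coordinate descent for
    (1/2n)||y - b0 - Xb||^2 + alpha*l1_ratio*||b||_1 + 0.5*alpha*(1-l1_ratio)*||b||^2,
    assuming the columns of X are centered (so the intercept is mean(y))."""
    n, p = len(X), len(X[0])
    b0 = sum(y) / n
    b = [0.0] * p
    resid = [yi - b0 for yi in y]  # residuals with every coefficient at zero
    for _ in range(n_iter):
        for j in range(p):
            # Correlation of feature j with the residual that excludes feature j.
            rho = sum(X[i][j] * (resid[i] + X[i][j] * b[j]) for i in range(n)) / n
            z = sum(X[i][j] ** 2 for i in range(n)) / n
            new_bj = soft_threshold(rho, alpha * l1_ratio) / (z + alpha * (1 - l1_ratio))
            for i in range(n):
                resid[i] -= X[i][j] * (new_bj - b[j])  # keep residuals in sync
            b[j] = new_bj
    return b0, b


def prediction_r2(y_true, y_pred, train_mean):
    """Out-of-sample R^2 relative to a baseline that always predicts train_mean."""
    sse_model = sum((yt - yp) ** 2 for yt, yp in zip(y_true, y_pred))
    sse_base = sum((yt - train_mean) ** 2 for yt in y_true)
    return 1.0 - sse_model / sse_base


def simulate(n, rng):
    """Hypothetical data: column 0 stands in for prior ability, columns 1-3 for survey features."""
    X, y = [], []
    for _ in range(n):
        row = [rng.gauss(0, 1) for _ in range(4)]
        y.append(0.8 * row[0] + 0.3 * row[1] + 0.2 * row[2] + rng.gauss(0, 0.5))
        X.append(row)
    return X, y


def evaluate(X_tr, y_tr, X_te, y_te, cols):
    """Fit on the chosen columns of the training data; return held-out R^2."""
    def pick(X):
        return [[row[j] for j in cols] for row in X]

    Xs_tr, Xs_te = standardize(pick(X_tr), pick(X_te))
    b0, b = fit_elastic_net(Xs_tr, y_tr)
    preds = [b0 + sum(bj * xj for bj, xj in zip(b, row)) for row in Xs_te]
    return prediction_r2(y_te, preds, train_mean=sum(y_tr) / len(y_tr))


rng = random.Random(0)
X_train, y_train = simulate(300, rng)
X_test, y_test = simulate(200, rng)
r2_prior = evaluate(X_train, y_train, X_test, y_test, cols=[0])
r2_full = evaluate(X_train, y_train, X_test, y_test, cols=[0, 1, 2, 3])
print(f"prior ability only: R^2 = {r2_prior:.2f}")
print(f"all features:       R^2 = {r2_full:.2f}  (delta R^2 = {r2_full - r2_prior:.2f})")
```

The difference `r2_full - r2_prior` plays the role of the "additional variance beyond prior ability" reported above. In practice, the penalty strength would be tuned by cross-validation on the training data rather than fixed.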

Modeling large survey data with an algorithmic approach, however, is not without its difficulties. In this talk we will also discuss the challenges of using machine learning on panel survey data. These include (i) having only a few time points, (ii) a nested data structure (students within classes and schools), (iii) measurement error in the self-report and test measures of psychological constructs, (iv) missing data due to drop-out over time, and (v) the effects of multicollinearity on the feature importance analysis.
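Two of these challenges, nesting (ii) and multicollinearity (v), have common partial remedies that can be sketched briefly. The sketch is hypothetical and does not describe the authors' pipeline. Grouped cross-validation assigns whole schools to folds, so no school contributes students to both training and test data. Grouped permutation importance shuffles a set of correlated features jointly, so one feature cannot "cover" for its permuted partner. scikit-learn offers `GroupKFold` and `permutation_importance` for production use. The pure-Python versions below only keep the example self-contained. The simulated data, the stand-in `predict` function, and the feature-group names are all hypothetical.

```python
import random


def group_kfold(group_ids, k, seed=0):
    """Assign whole groups (e.g. schools) to k folds so that no group is split
    between training and test data. Returns (train_idx, test_idx) pairs."""
    groups = sorted(set(group_ids))
    random.Random(seed).shuffle(groups)
    fold_of = {g: i % k for i, g in enumerate(groups)}  # round-robin over shuffled groups
    return [
        ([i for i, g in enumerate(group_ids) if fold_of[g] != f],
         [i for i, g in enumerate(group_ids) if fold_of[g] == f])
        for f in range(k)
    ]


def r2_score(y, preds):
    mean_y = sum(y) / len(y)
    sse = sum((a - b) ** 2 for a, b in zip(y, preds))
    sst = sum((a - mean_y) ** 2 for a in y)
    return 1.0 - sse / sst


def grouped_permutation_importance(predict, X, y, feature_groups, n_repeats=20, seed=0):
    """Mean drop in R^2 when all columns of a feature group are shuffled with the
    same row permutation, so correlated features are assessed jointly."""
    rng = random.Random(seed)
    base = r2_score(y, [predict(row) for row in X])
    scores = {}
    for name, cols in feature_groups.items():
        drops = []
        for _ in range(n_repeats):
            perm = list(range(len(X)))
            rng.shuffle(perm)
            X_perm = [list(row) for row in X]
            for i, src in enumerate(perm):
                for j in cols:
                    X_perm[i][j] = X[src][j]
            drops.append(base - r2_score(y, [predict(row) for row in X_perm]))
        scores[name] = sum(drops) / n_repeats
    return scores


# Hypothetical data: 20 schools x 15 students; columns 0 and 1 are two highly
# correlated survey scales, column 2 is unrelated to the outcome.
rng = random.Random(1)
school, X, y = [], [], []
for s in range(20):
    for _ in range(15):
        a = rng.gauss(0, 1)
        X.append([a, a + rng.gauss(0, 0.2), rng.gauss(0, 1)])
        y.append(a + rng.gauss(0, 0.3))
        school.append(s)

folds = group_kfold(school, k=5)
_, test_idx = folds[0]  # fitting a model on the training indices is omitted here


def predict(row):
    # Stand-in for a fitted model that spreads weight across the correlated pair.
    return 0.5 * row[0] + 0.5 * row[1]


X_test = [X[i] for i in test_idx]
y_test = [y[i] for i in test_idx]
importance = grouped_permutation_importance(
    predict, X_test, y_test,
    {"scale_a": [0], "scale_b": [1], "scales_a_and_b": [0, 1], "unrelated": [2]},
)
for name, drop in importance.items():
    print(f"{name:15s} R^2 drop = {drop:.2f}")
```

In this toy setting, permuting either correlated scale alone understates the pair's importance, because the unshuffled partner still carries most of the signal. The joint group shows the larger drop.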

To conclude, machine learning methodologies offer Educational Psychology the potential for new insights through the comparative assessment of predictive effects and of how these effects vary over time. However, work remains to be done in designing machine learning pipelines that handle the aforementioned challenges of large-scale survey data well.
